AI Models - Generative Adversarial Networks (GAN) (1)

No utilitarianism, no showing off, just the purest discussion.

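Today Hong Kong hoisted Typhoon Signal No. 8 and all outside supplies were cut off; luckily there was still food in the house. With a pack of instant noodles down, I dug into the latest GAN course from Andrew Ng's DeepLearning.AI. Using PyTorch, this article walks through generative adversarial networks from theory to practice.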

Look at the image above: does this person look real to you? She looks very real, but she does not exist. She is just an image produced by a GAN.

You may already have heard plenty of people mention GANs, but what can a GAN actually do?

Generate a piece of artwork

Generate a better-looking portrait of you

Generate a picture of a cat that has never existed

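Magical, isn't it? Best of all, you have plenty of freedom to create! It is like shopping for furniture at IKEA: the ready-made pieces rarely match 100% of what you had in mind, so why not build your own!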

01

Introduction to GANs

First, the full name: GAN stands for Generative Adversarial Networks.

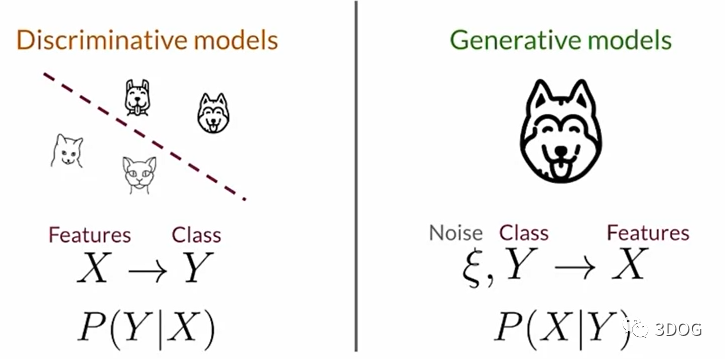

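Let's take the name apart piece by piece, starting with what a generative model is. Its counterpart is the discriminative model. Discriminative models are typically used for classification, for example telling cats from dogs in a pile of pictures. They extract features X and then classify them, which means they model P(Y|X). A generative model approaches the problem in reverse. It starts from a class Y, mixes in random noise so that every sample comes out different, and generates features X. In other words, it models P(X|Y), or simply P(X) when there are no classes.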
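To make the two directions concrete, here is a minimal PyTorch sketch. The layer sizes, the two classes ("cat" and "dog") and the one-hot encoding of the class are illustrative assumptions, not part of the course code. The classifier maps features X to class probabilities. The generator maps a class label plus noise to features X.

```python
import torch
from torch import nn

torch.manual_seed(0)

n_features, n_classes, z_dim = 784, 2, 16  # hypothetical sizes (e.g. cat vs dog)

# Discriminative direction: features X -> class probabilities, i.e. a model of P(Y|X)
classifier = nn.Sequential(nn.Linear(n_features, 64), nn.ReLU(), nn.Linear(64, n_classes))

# Generative direction: (class Y, noise z) -> features X, i.e. sampling from P(X|Y)
generator = nn.Sequential(nn.Linear(n_classes + z_dim, 64), nn.ReLU(), nn.Linear(64, n_features))

x = torch.randn(8, n_features)              # a batch of 8 feature vectors
p_y_given_x = classifier(x).softmax(dim=1)  # 8 x 2 class probabilities

y = nn.functional.one_hot(torch.zeros(8, dtype=torch.long), n_classes).float()  # all class 0 ("cat")
z = torch.randn(8, z_dim)                    # random noise makes each sample different
fake_x = generator(torch.cat([y, z], dim=1))  # 8 x 784 generated feature vectors
print(p_y_given_x.shape, fake_x.shape)
```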

That description is rough, and you are probably wondering how a model can generate features "in reverse". Let's look at the details.

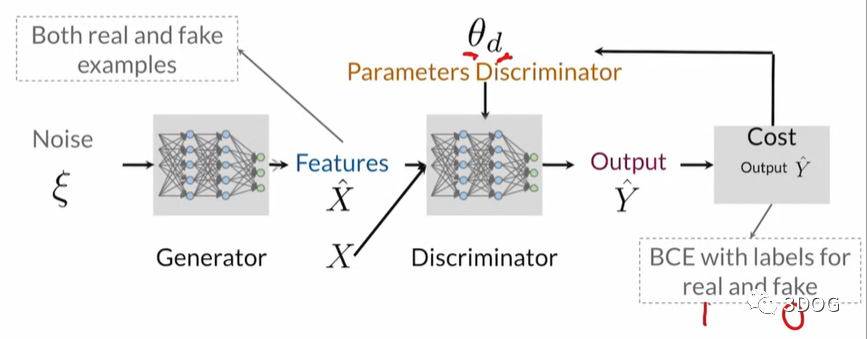

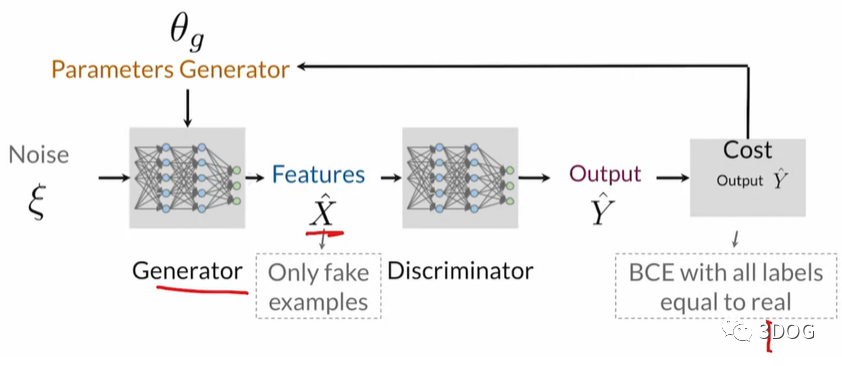

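A GAN consists of a generator and a discriminator. The generator takes random noise as input and, following the rules it has learned, produces an image of the corresponding class. But how good is the generator's output? That is where the discriminator comes in. The discriminator works behind the scenes: it is only needed during training. Its job is to judge whether an image of a given class is real or fake. The generator and the discriminator keep competing with and learning from each other, hence the name adversarial. How long does this competition go on? It continues until the discriminator is no longer useful, meaning it can no longer tell the generator's images from real ones, whatever noise the generator is given. At that point the adversarial training stops.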
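For reference, the original GAN paper [2] writes this competition as a two-player minimax game. Here $D(x)$ is the discriminator's probability that $x$ is real, and $G(z)$ is the generator's output for noise $z$:

$$
\min_G \max_D V(D, G) = \mathbb{E}_{x \sim p_{\text{data}}}\big[\log D(x)\big] + \mathbb{E}_{z \sim p_z}\big[\log\big(1 - D(G(z))\big)\big]
$$

For a fixed generator with output distribution $p_g$, the best discriminator is

$$
D^*_G(x) = \frac{p_{\text{data}}(x)}{p_{\text{data}}(x) + p_g(x)}
$$

So once $p_g = p_{\text{data}}$, $D^*_G(x) = \tfrac{1}{2}$ everywhere. This is the formal version of the discriminator "losing its usefulness".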

That is a bit clearer, but it is not yet a thorough understanding. Let's go deeper.

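The generator's goal is to produce images that look as real as possible. The discriminator's goal is to spot which of the generator's outputs are fake. Of course, the discriminator knows nothing at first, so we keep giving it feedback (real images labeled real, generated images labeled fake) until it has some basic judgment. As the competition goes on, the generator gets better and better at "forging", and the discriminator's criteria become finer and finer. Likewise, the generator starts out knowing nothing about producing real-looking images, so all it can do is scribble at random. With the discriminator's feedback, its drawings gradually become more and more realistic.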
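The feedback loop described above can be sketched on a toy problem. This is a hypothetical 1-D example, not taken from the course. The "real" data are numbers drawn from N(4, 1.25²), and the generator is a single linear layer computing a·z + b. Each step first gives the discriminator feedback (real -> 1, fake -> 0), then gives the generator feedback (it wants its fakes labeled 1).

```python
import torch
from torch import nn

torch.manual_seed(0)

def real_batch(n):
    # Hypothetical "real" data: 1-D samples from N(4, 1.25^2)
    return 4.0 + 1.25 * torch.randn(n, 1)

gen = nn.Linear(1, 1)  # maps noise z ~ N(0, 1) to a*z + b
disc = nn.Sequential(nn.Linear(1, 16), nn.LeakyReLU(0.2), nn.Linear(16, 1))  # outputs a logit
criterion = nn.BCEWithLogitsLoss()
gen_opt = torch.optim.Adam(gen.parameters(), lr=1e-2)
disc_opt = torch.optim.Adam(disc.parameters(), lr=1e-2)

for step in range(500):
    real = real_batch(64)
    fake = gen(torch.randn(64, 1))

    # 1) Feedback for the discriminator: real -> 1, fake -> 0 (generator detached)
    disc_opt.zero_grad()
    disc_loss = (criterion(disc(real), torch.ones(64, 1)) +
                 criterion(disc(fake.detach()), torch.zeros(64, 1))) / 2
    disc_loss.backward()
    disc_opt.step()

    # 2) Feedback for the generator: it wants its fakes judged as real (label 1)
    gen_opt.zero_grad()
    gen_loss = criterion(disc(fake), torch.ones(64, 1))
    gen_loss.backward()
    gen_opt.step()

with torch.no_grad():
    samples = gen(torch.randn(1000, 1))
print(f"generated mean {samples.mean():.2f}, std {samples.std():.2f}")
```

Compare the printed mean and standard deviation with the real 4 and 1.25. GAN training can oscillate even on a problem this small, so the result depends on the seed and the learning rate.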

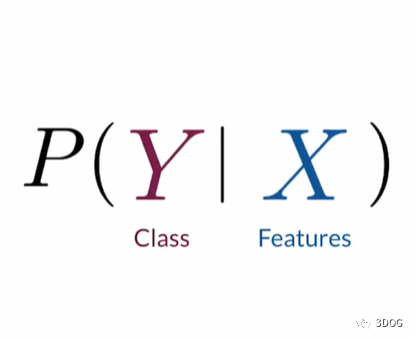

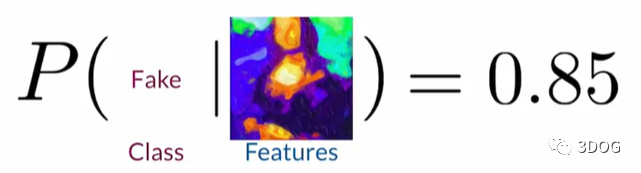

So how does the discriminator work?

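Take the task of deciding whether a painting is a genuine Mona Lisa. The discriminator takes the image's features as input and outputs a probability for the class, here the probability that the painting is genuine.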
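Here is a minimal sketch of the discriminator's last step. It assumes the discriminator outputs a raw score (logit), as the Discriminator class later in this article does. The sigmoid turns that score into the probability that the image is real. Binary cross-entropy against the label "real" is then simply -log of that probability. The three logits are made-up values.

```python
import torch
from torch import nn

logits = torch.tensor([[-2.0], [0.0], [3.0]])  # hypothetical raw discriminator scores for 3 images
probs = torch.sigmoid(logits)                   # P(real | image): about 0.12, 0.50, 0.95
is_real = probs > 0.5                           # decision threshold at 0.5

# Binary cross-entropy against the label "real" (1) equals -log(P(real | image))
loss_if_real = nn.BCEWithLogitsLoss(reduction="none")(logits, torch.ones_like(logits))
assert torch.allclose(loss_if_real, -torch.log(probs))
print(probs.squeeze().tolist(), is_real.squeeze().tolist())
```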

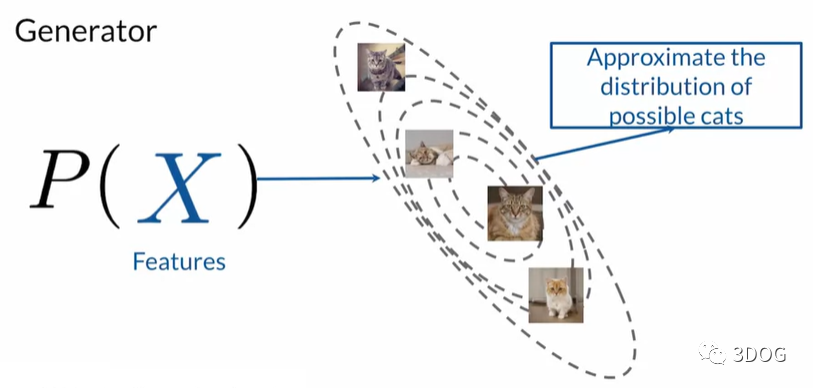

And how does the generator work?

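Suppose we want to create a sufficiently realistic image of a cat. The generator takes random noise (and, optionally, the desired class "cat") as input and outputs the features of an image, that is, its pixels.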
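The following is a hypothetical conditional generator for the cat example, for illustration only. The MNIST generator later in this article is unconditional and takes only noise. Here the class label is one-hot encoded and concatenated with the noise. The sizes and the `CAT` class index are assumptions. The network is deterministic: the same noise always yields the same image, and new noise yields a different cat.

```python
import torch
from torch import nn

torch.manual_seed(0)
z_dim, n_classes, im_dim = 16, 10, 784  # hypothetical sizes
CAT = 3                                  # hypothetical index of the "cat" class

gen = nn.Sequential(nn.Linear(z_dim + n_classes, 128), nn.ReLU(),
                    nn.Linear(128, im_dim), nn.Sigmoid())  # pixel values in (0, 1)

def make_cat(noise):
    # One-hot encode the "cat" label for every noise vector and append it to the noise
    label = nn.functional.one_hot(torch.tensor([CAT] * len(noise)), n_classes).float()
    return gen(torch.cat([noise, label], dim=1))

z1, z2 = torch.randn(1, z_dim), torch.randn(1, z_dim)
same = torch.equal(make_cat(z1), make_cat(z1))           # same noise -> identical image
different = not torch.equal(make_cat(z1), make_cat(z2))  # new noise -> a different cat
print(same, different)
```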

The overall structure is shown below:

02

PyTorch Implementation

```python
import torch
from torch import nn
from tqdm.auto import tqdm
from torchvision import transforms
from torchvision.datasets import MNIST  # Training dataset
from torchvision.utils import make_grid
from torch.utils.data import DataLoader
import matplotlib.pyplot as plt

torch.manual_seed(0)  # Set for testing purposes, please do not change!


def show_tensor_images(image_tensor, num_images=25, size=(1, 28, 28)):
    '''
    Function for visualizing images: Given a tensor of images, number of images, and
    size per image, plots and prints the images in a uniform grid.
    '''
    image_unflat = image_tensor.detach().cpu().view(-1, *size)
    image_grid = make_grid(image_unflat[:num_images], nrow=5)
    plt.imshow(image_grid.permute(1, 2, 0).squeeze())
    plt.show()
```

```python
# UNQ_C1 (UNIQUE CELL IDENTIFIER, DO NOT EDIT)
# GRADED FUNCTION: get_generator_block
def get_generator_block(input_dim, output_dim):
    '''
    Function for returning a block of the generator's neural network
    given input and output dimensions.
    Parameters:
        input_dim: the dimension of the input vector, a scalar
        output_dim: the dimension of the output vector, a scalar
    Returns:
        a generator neural network layer, with a linear transformation
          followed by a batch normalization and then a relu activation
    '''
    return nn.Sequential(
        nn.Linear(input_dim, output_dim),
        nn.BatchNorm1d(output_dim),
        nn.ReLU(inplace=True),
    )
```

```python
# Verify the generator block function
def test_gen_block(in_features, out_features, num_test=1000):
    block = get_generator_block(in_features, out_features)

    # Check the three parts
    assert len(block) == 3
    assert type(block[0]) == nn.Linear
    assert type(block[1]) == nn.BatchNorm1d
    assert type(block[2]) == nn.ReLU

    # Check the output shape
    test_input = torch.randn(num_test, in_features)
    test_output = block(test_input)
    assert tuple(test_output.shape) == (num_test, out_features)
    assert test_output.std() > 0.55
    assert test_output.std() < 0.65

test_gen_block(25, 12)
test_gen_block(15, 28)
print("Success!")
```
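Why does `test_gen_block` expect a standard deviation between 0.55 and 0.65? A freshly initialized BatchNorm1d (γ = 1, β = 0) in training mode rescales each feature to roughly zero mean and unit variance. For Z ~ N(0, 1), E[ReLU(Z)] = 1/√(2π) and E[ReLU(Z)²] = 1/2, so std(ReLU(Z)) = √(1/2 - 1/(2π)) ≈ 0.584. The sketch below checks this both analytically and with a quick simulation. The input shift, scale and sizes are arbitrary choices.

```python
import math
import torch
from torch import nn

torch.manual_seed(0)

# Analytic value for Z ~ N(0, 1)
mean_relu = 1 / math.sqrt(2 * math.pi)  # E[ReLU(Z)]
second_moment = 0.5                     # E[ReLU(Z)^2]
analytic_std = math.sqrt(second_moment - mean_relu ** 2)

# Simulation: arbitrarily shifted/scaled inputs -> fresh BatchNorm1d (training mode) -> ReLU
x = torch.randn(100_000, 12) * 3 + 5
empirical_std = torch.relu(nn.BatchNorm1d(12)(x)).std().item()

print(f"analytic {analytic_std:.3f}, empirical {empirical_std:.3f}")  # both close to 0.584
```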

```python
# UNQ_C2 (UNIQUE CELL IDENTIFIER, DO NOT EDIT)
# GRADED FUNCTION: Generator
class Generator(nn.Module):
    '''
    Generator Class
    Values:
        z_dim: the dimension of the noise vector, a scalar
        im_dim: the dimension of the images, fitted for the dataset used, a scalar
          (MNIST images are 28 x 28 = 784 so that is your default)
        hidden_dim: the inner dimension, a scalar
    '''
    def __init__(self, z_dim=10, im_dim=784, hidden_dim=128):
        super(Generator, self).__init__()
        # Build the neural network
        self.gen = nn.Sequential(
            get_generator_block(z_dim, hidden_dim),
            get_generator_block(hidden_dim, hidden_dim * 2),
            get_generator_block(hidden_dim * 2, hidden_dim * 4),
            get_generator_block(hidden_dim * 4, im_dim),
            nn.Linear(im_dim, im_dim),
            nn.Sigmoid()
        )

    def forward(self, noise):
        '''
        Function for completing a forward pass of the generator: Given a noise tensor,
        returns generated images.
        Parameters:
            noise: a noise tensor with dimensions (n_samples, z_dim)
        '''
        return self.gen(noise)

    # Needed for grading
    def get_gen(self):
        '''
        Returns:
            the sequential model
        '''
        return self.gen
```

```python
# Verify the generator class
def test_generator(z_dim, im_dim, hidden_dim, num_test=10000):
    gen = Generator(z_dim, im_dim, hidden_dim).get_gen()

    # Check there are six modules in the sequential part
    assert len(gen) == 6
    test_input = torch.randn(num_test, z_dim)
    test_output = gen(test_input)

    # Check that the output shape is correct
    assert tuple(test_output.shape) == (num_test, im_dim)
    assert test_output.max() < 1, "Make sure to use a sigmoid"
    assert test_output.min() > 0, "Make sure to use a sigmoid"
    assert test_output.std() > 0.05, "Don't use batchnorm here"
    assert test_output.std() < 0.15, "Don't use batchnorm here"

test_generator(5, 10, 20)
test_generator(20, 8, 24)
print("Success!")
```
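As a quick sanity check on this architecture, a small helper can count the generator's trainable parameters by hand. The helper is hypothetical and not part of the course. It relies on three rules. Each Linear(n_in, n_out) has n_in·n_out weights plus n_out biases. Each BatchNorm1d(n) has 2n learnable parameters; its running statistics are buffers, not parameters. ReLU and Sigmoid have none.

```python
def linear_params(n_in, n_out):
    # weight matrix + bias vector
    return n_in * n_out + n_out

def generator_params(z_dim=10, im_dim=784, hidden_dim=128):
    # Hypothetical helper mirroring the Generator above: four generator blocks ...
    dims = [z_dim, hidden_dim, hidden_dim * 2, hidden_dim * 4, im_dim]
    total = 0
    for n_in, n_out in zip(dims, dims[1:]):
        total += linear_params(n_in, n_out) + 2 * n_out  # Linear + BatchNorm1d (gamma, beta)
    # ... followed by the final Linear(im_dim, im_dim) before the Sigmoid
    total += linear_params(im_dim, im_dim)
    return total

print(generator_params())  # 1187008
```

With the Generator class above defined, `sum(p.numel() for p in Generator().parameters())` should give the same number for the defaults.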

```python
# UNQ_C3 (UNIQUE CELL IDENTIFIER, DO NOT EDIT)
# GRADED FUNCTION: get_noise
def get_noise(n_samples, z_dim, device='cpu'):
    '''
    Function for creating noise vectors: Given the dimensions (n_samples, z_dim),
    creates a tensor of that shape filled with random numbers from the normal distribution.
    Parameters:
        n_samples: the number of samples to generate, a scalar
        z_dim: the dimension of the noise vector, a scalar
        device: the device type
    '''
    return torch.randn(n_samples, z_dim, device=device)
```

```python
# Verify the noise vector function
def test_get_noise(n_samples, z_dim, device='cpu'):
    noise = get_noise(n_samples, z_dim, device)

    # Make sure a normal distribution was used
    assert tuple(noise.shape) == (n_samples, z_dim)
    assert torch.abs(noise.std() - torch.tensor(1.0)) < 0.01
    assert str(noise.device).startswith(device)

test_get_noise(1000, 100, 'cpu')
if torch.cuda.is_available():
    test_get_noise(1000, 32, 'cuda')
print("Success!")
```

```python
# UNQ_C4 (UNIQUE CELL IDENTIFIER, DO NOT EDIT)
# GRADED FUNCTION: get_discriminator_block
def get_discriminator_block(input_dim, output_dim):
    '''
    Discriminator Block
    Function for returning a neural network of the discriminator given input and output dimensions.
    Parameters:
        input_dim: the dimension of the input vector, a scalar
        output_dim: the dimension of the output vector, a scalar
    Returns:
        a discriminator neural network layer, with a linear transformation
          followed by an nn.LeakyReLU activation with negative slope of 0.2
          (https://pytorch.org/docs/master/generated/torch.nn.LeakyReLU.html)
    '''
    return nn.Sequential(
        nn.Linear(input_dim, output_dim),
        nn.LeakyReLU(negative_slope=0.2, inplace=True)
    )
```

```python
# Verify the discriminator block function
def test_disc_block(in_features, out_features, num_test=10000):
    block = get_discriminator_block(in_features, out_features)

    # Check there are two parts
    assert len(block) == 2
    test_input = torch.randn(num_test, in_features)
    test_output = block(test_input)

    # Check that the shape is right
    assert tuple(test_output.shape) == (num_test, out_features)

    # Check that the LeakyReLU slope is about 0.2
    assert -test_output.min() / test_output.max() > 0.1
    assert -test_output.min() / test_output.max() < 0.3
    assert test_output.std() > 0.3
    assert test_output.std() < 0.5

test_disc_block(25, 12)
test_disc_block(15, 28)
print("Success!")
```

```python
# UNQ_C5 (UNIQUE CELL IDENTIFIER, DO NOT EDIT)
# GRADED FUNCTION: Discriminator
class Discriminator(nn.Module):
    '''
    Discriminator Class
    Values:
        im_dim: the dimension of the images, fitted for the dataset used, a scalar
            (MNIST images are 28x28 = 784 so that is your default)
        hidden_dim: the inner dimension, a scalar
    '''
    def __init__(self, im_dim=784, hidden_dim=128):
        super(Discriminator, self).__init__()
        self.disc = nn.Sequential(
            get_discriminator_block(im_dim, hidden_dim * 4),
            get_discriminator_block(hidden_dim * 4, hidden_dim * 2),
            get_discriminator_block(hidden_dim * 2, hidden_dim),
            nn.Linear(hidden_dim, 1)
        )

    def forward(self, image):
        '''
        Function for completing a forward pass of the discriminator: Given an image tensor,
        returns a 1-dimension tensor representing fake/real.
        Parameters:
            image: a flattened image tensor with dimension (im_dim)
        '''
        return self.disc(image)

    # Needed for grading
    def get_disc(self):
        '''
        Returns:
            the sequential model
        '''
        return self.disc
```

```python
# Verify the discriminator class
def test_discriminator(z_dim, hidden_dim, num_test=100):
    disc = Discriminator(z_dim, hidden_dim).get_disc()

    # Check there are four parts (three blocks plus the final linear layer)
    assert len(disc) == 4

    # Check the linear layer is correct
    test_input = torch.randn(num_test, z_dim)
    test_output = disc(test_input)
    assert tuple(test_output.shape) == (num_test, 1)

    # Make sure there's no sigmoid
    assert test_input.max() > 1
    assert test_input.min() < -1

test_discriminator(5, 10)
test_discriminator(20, 8)
print("Success!")
```
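Why must the discriminator end without a sigmoid? The training code below uses `nn.BCEWithLogitsLoss`, which applies the sigmoid internally in a numerically stable way. The sketch below compares it with a separate sigmoid followed by `nn.BCELoss`, using one deliberately extreme, made-up logit for an image labeled fake. In float32, sigmoid(200) rounds to exactly 1.0. The separate version therefore relies on BCELoss clamping its log at -100, and its gradient vanishes.

```python
import torch
from torch import nn

# A hypothetical, very confident discriminator logit for an image whose true label is fake (0)
logit = torch.tensor([[200.0]], requires_grad=True)
label = torch.zeros(1, 1)

# Option A: sigmoid inside the model, then BCELoss
naive_loss = nn.BCELoss()(torch.sigmoid(logit), label)
naive_grad, = torch.autograd.grad(naive_loss, logit)

# Option B (what this article uses): raw logit + BCEWithLogitsLoss
stable_loss = nn.BCEWithLogitsLoss()(logit, label)
stable_grad, = torch.autograd.grad(stable_loss, logit)

print(naive_loss.item(), naive_grad.item())    # loss clamped to 100, gradient 0
print(stable_loss.item(), stable_grad.item())  # loss 200, gradient sigmoid(200) - 0 = 1
```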

The preparation is now mostly done. Next, we build the training setup.

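```python
# Set your parameters
criterion = nn.BCEWithLogitsLoss()
n_epochs = 200
z_dim = 64
display_step = 500
batch_size = 128
lr = 0.00001
device = 'cuda' if torch.cuda.is_available() else 'cpu'  # fall back to CPU when no GPU is present

# Load MNIST dataset as tensors (downloaded into the current directory on first run)
dataloader = DataLoader(
    MNIST('.', download=True, transform=transforms.ToTensor()),
    batch_size=batch_size,
    shuffle=True)
```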

```python
gen = Generator(z_dim).to(device)
gen_opt = torch.optim.Adam(gen.parameters(), lr=lr)
disc = Discriminator().to(device)
disc_opt = torch.optim.Adam(disc.parameters(), lr=lr)
```

```python
# UNQ_C6 (UNIQUE CELL IDENTIFIER, DO NOT EDIT)
# GRADED FUNCTION: get_disc_loss
def get_disc_loss(gen, disc, criterion, real, num_images, z_dim, device):
    '''
    Return the loss of the discriminator given inputs.
    Parameters:
        gen: the generator model, which returns an image given z-dimensional noise
        disc: the discriminator model, which returns a single-dimensional prediction of real/fake
        criterion: the loss function, which should be used to compare
               the discriminator's predictions to the ground truth reality of the images
               (e.g. fake = 0, real = 1)
        real: a batch of real images
        num_images: the number of images the generator should produce,
                which is also the length of the real images
        z_dim: the dimension of the noise vector, a scalar
        device: the device type
    Returns:
        disc_loss: a torch scalar loss value for the current batch
    '''
    # These are the steps you will need to complete:
    #   1) Create noise vectors and generate a batch (num_images) of fake images.
    #        Make sure to pass the device argument to the noise.
    #   2) Get the discriminator's prediction of the fake image
    #        and calculate the loss. Don't forget to detach the generator!
    #        (Remember the loss function you set earlier -- criterion. You need a
    #        'ground truth' tensor in order to calculate the loss.
    #        For example, a ground truth tensor for a fake image is all zeros.)
    #   3) Get the discriminator's prediction of the real image and calculate the loss.
    #   4) Calculate the discriminator's loss by averaging the real and fake loss
    #        and set it to disc_loss.
    # Note: Please do not use concatenation in your solution. The tests are being updated to
    #       support this, but for now, average the two losses as described in step (4).
    # *Important*: You should NOT write your own loss function here - use criterion(pred, true)!
    #### START CODE HERE ####
    fake_images = gen(get_noise(num_images, z_dim, device=device))
    # detach() keeps the discriminator loss from backpropagating into the generator
    fake_loss = criterion(disc(fake_images.detach()), torch.zeros((num_images, 1), device=device))
    real_loss = criterion(disc(real), torch.ones((num_images, 1), device=device))
    disc_loss = (fake_loss + real_loss) / 2.0
    #### END CODE HERE ####
    return disc_loss
```

```python
def test_disc_reasonable(num_images=10):
    # Don't use explicit casts to cuda - use the device argument
    import inspect, re
    lines = inspect.getsource(get_disc_loss)
    assert (re.search(r"to\(.cuda.\)", lines)) is None
    assert (re.search(r"\.cuda\(\)", lines)) is None

    z_dim = 64
    gen = torch.zeros_like
    disc = lambda x: x.mean(1)[:, None]
    criterion = torch.mul  # Multiply
    real = torch.ones(num_images, z_dim)
    disc_loss = get_disc_loss(gen, disc, criterion, real, num_images, z_dim, 'cpu')
    assert torch.all(torch.abs(disc_loss.mean() - 0.5) < 1e-5)

    gen = torch.ones_like
    criterion = torch.mul  # Multiply
    real = torch.zeros(num_images, z_dim)
    assert torch.all(torch.abs(get_disc_loss(gen, disc, criterion, real, num_images, z_dim, 'cpu')) < 1e-5)

    gen = lambda x: torch.ones(num_images, 10)
    disc = lambda x: x.mean(1)[:, None] + 10
    criterion = torch.mul  # Multiply
    real = torch.zeros(num_images, 10)
    assert torch.all(torch.abs(get_disc_loss(gen, disc, criterion, real, num_images, z_dim, 'cpu').mean() - 5) < 1e-5)

    gen = torch.ones_like
    disc = nn.Linear(64, 1, bias=False)
    real = torch.ones(num_images, 64) * 0.5
    disc.weight.data = torch.ones_like(disc.weight.data) * 0.5
    disc_opt = torch.optim.Adam(disc.parameters(), lr=lr)
    criterion = lambda x, y: torch.sum(x) + torch.sum(y)
    disc_loss = get_disc_loss(gen, disc, criterion, real, num_images, z_dim, 'cpu').mean()
    disc_loss.backward()
    assert torch.isclose(torch.abs(disc.weight.grad.mean() - 11.25), torch.tensor(3.75))

def test_disc_loss(max_tests=10):
    z_dim = 64
    gen = Generator(z_dim).to(device)
    gen_opt = torch.optim.Adam(gen.parameters(), lr=lr)
    disc = Discriminator().to(device)
    disc_opt = torch.optim.Adam(disc.parameters(), lr=lr)
    num_steps = 0
    for real, _ in dataloader:
        cur_batch_size = len(real)
        real = real.view(cur_batch_size, -1).to(device)

        ### Update discriminator ###
        # Zero out the gradient before backpropagation
        disc_opt.zero_grad()

        # Calculate discriminator loss
        disc_loss = get_disc_loss(gen, disc, criterion, real, cur_batch_size, z_dim, device)
        assert (disc_loss - 0.68).abs() < 0.05

        # Update gradients
        disc_loss.backward(retain_graph=True)

        # Check that they detached correctly
        assert gen.gen[0][0].weight.grad is None

        # Update optimizer
        old_weight = disc.disc[0][0].weight.data.clone()
        disc_opt.step()
        new_weight = disc.disc[0][0].weight.data

        # Check that some discriminator weights changed
        assert not torch.all(torch.eq(old_weight, new_weight))
        num_steps += 1
        if num_steps >= max_tests:
            break

test_disc_reasonable()
test_disc_loss()
print("Success!")
```
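The "detach the generator" step matters because the discriminator loss is computed from the generator's output. This hypothetical sketch uses two tiny linear layers in place of the real networks. Without `detach()`, backpropagating the discriminator loss also fills the generator's `.grad`. That is exactly what `test_disc_loss` checks against with `gen.gen[0][0].weight.grad is None`.

```python
import torch
from torch import nn

torch.manual_seed(0)
gen = nn.Linear(4, 8)   # hypothetical tiny "generator"
disc = nn.Linear(8, 1)  # hypothetical tiny "discriminator"
criterion = nn.BCEWithLogitsLoss()
fake = gen(torch.randn(5, 4))
fake_labels = torch.zeros(5, 1)

# Without detach(): the discriminator loss flows back into the generator
criterion(disc(fake), fake_labels).backward()
leaked = gen.weight.grad is not None  # True

# Reset gradients, then repeat with the graph cut at the generator's output
gen.zero_grad(set_to_none=True)
disc.zero_grad(set_to_none=True)
criterion(disc(fake.detach()), fake_labels).backward()
gen_untouched = gen.weight.grad is None      # True
disc_updated = disc.weight.grad is not None  # True
print(leaked, gen_untouched, disc_updated)
```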

```python
# UNQ_C7 (UNIQUE CELL IDENTIFIER, DO NOT EDIT)
# GRADED FUNCTION: get_gen_loss
def get_gen_loss(gen, disc, criterion, num_images, z_dim, device):
    '''
    Return the loss of the generator given inputs.
    Parameters:
        gen: the generator model, which returns an image given z-dimensional noise
        disc: the discriminator model, which returns a single-dimensional prediction of real/fake
        criterion: the loss function, which should be used to compare
               the discriminator's predictions to the ground truth reality of the images
               (e.g. fake = 0, real = 1)
        num_images: the number of images the generator should produce,
                which is also the length of the real images
        z_dim: the dimension of the noise vector, a scalar
        device: the device type
    Returns:
        gen_loss: a torch scalar loss value for the current batch
    '''
    # These are the steps you will need to complete:
    #   1) Create noise vectors and generate a batch of fake images.
    #       Remember to pass the device argument to the get_noise function.
    #   2) Get the discriminator's prediction of the fake image.
    #   3) Calculate the generator's loss. Remember the generator wants
    #       the discriminator to think that its fake images are real
    # *Important*: You should NOT write your own loss function here - use criterion(pred, true)!
    fake_images = gen(get_noise(num_images, z_dim, device=device))
    out = disc(fake_images)
    # Label the fakes as real (ones): the generator wants to fool the discriminator
    gen_loss = criterion(out, torch.ones((num_images, 1), device=device))
    return gen_loss
```

```python
def test_gen_reasonable(num_images=10):
    # Don't use explicit casts to cuda - use the device argument
    import inspect, re
    lines = inspect.getsource(get_gen_loss)
    assert (re.search(r"to\(.cuda.\)", lines)) is None
    assert (re.search(r"\.cuda\(\)", lines)) is None

    z_dim = 64
    gen = torch.zeros_like
    disc = nn.Identity()
    criterion = torch.mul  # Multiply
    gen_loss_tensor = get_gen_loss(gen, disc, criterion, num_images, z_dim, 'cpu')
    assert torch.all(torch.abs(gen_loss_tensor) < 1e-5)
    # Verify shape. Related to gen_noise parametrization
    assert tuple(gen_loss_tensor.shape) == (num_images, z_dim)

    gen = torch.ones_like
    disc = nn.Identity()
    criterion = torch.mul  # Multiply
    real = torch.zeros(num_images, 1)
    gen_loss_tensor = get_gen_loss(gen, disc, criterion, num_images, z_dim, 'cpu')
    assert torch.all(torch.abs(gen_loss_tensor - 1) < 1e-5)
    # Verify shape. Related to gen_noise parametrization
    assert tuple(gen_loss_tensor.shape) == (num_images, z_dim)


def test_gen_loss(num_images):
    z_dim = 64
    gen = Generator(z_dim).to(device)
    gen_opt = torch.optim.Adam(gen.parameters(), lr=lr)
    disc = Discriminator().to(device)
    disc_opt = torch.optim.Adam(disc.parameters(), lr=lr)

    gen_loss = get_gen_loss(gen, disc, criterion, num_images, z_dim, device)

    # Check that the loss is reasonable
    assert (gen_loss - 0.7).abs() < 0.1
    gen_loss.backward()
    old_weight = gen.gen[0][0].weight.clone()
    gen_opt.step()
    new_weight = gen.gen[0][0].weight
    assert not torch.all(torch.eq(old_weight, new_weight))

test_gen_reasonable(10)
test_gen_loss(18)
print("Success!")
```
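Note that `get_gen_loss` labels the fakes as real (ones). The generator therefore minimizes -log D(G(z)) rather than the log(1 - D(G(z))) term of the minimax objective. Goodfellow et al. [2] recommend this "non-saturating" form for a specific reason. Early in training the discriminator rejects fakes confidently, and log(1 - D(G(z))) then gives almost no gradient. The small sketch below assumes a logit of -5 and compares the gradients with respect to that logit. Analytically they are -σ(l) and σ(l) - 1.

```python
import torch
from torch import nn

# Early in training: the discriminator confidently rejects a fake, logit -5 -> D(G(z)) ~ 0.0067
logit = torch.tensor([[-5.0]], requires_grad=True)

# Minimax form: the generator minimizes log(1 - D(G(z)))
minimax = torch.log(1 - torch.sigmoid(logit)).sum()
g_minimax, = torch.autograd.grad(minimax, logit)

# Non-saturating form used by get_gen_loss: criterion(pred, ones) = -log D(G(z))
nonsat = nn.BCEWithLogitsLoss()(logit, torch.ones_like(logit))
g_nonsat, = torch.autograd.grad(nonsat, logit)

print(g_minimax.item(), g_nonsat.item())  # about -0.0067 vs -0.9933
```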

Let's start training~

```python
# UNQ_C8 (UNIQUE CELL IDENTIFIER, DO NOT EDIT)
# GRADED FUNCTION:

cur_step = 0
mean_generator_loss = 0
mean_discriminator_loss = 0
test_generator = True  # Whether the generator should be tested
gen_loss = False
error = False
for epoch in range(n_epochs):

    # Dataloader returns the batches
    for real, _ in tqdm(dataloader):
        cur_batch_size = len(real)

        # Flatten the batch of real images from the dataset
        real = real.view(cur_batch_size, -1).to(device)

        ### Update discriminator ###
        # Zero out the gradients before backpropagation
        disc_opt.zero_grad()

        # Calculate discriminator loss
        disc_loss = get_disc_loss(gen, disc, criterion, real, cur_batch_size, z_dim, device)

        # Update gradients
        disc_loss.backward(retain_graph=True)

        # Update optimizer
        disc_opt.step()

        # For testing purposes, to keep track of the generator weights
        if test_generator:
            old_generator_weights = gen.gen[0][0].weight.detach().clone()

        ### Update generator ###
        #     Hint: This code will look a lot like the discriminator updates!
        #     These are the steps you will need to complete:
        #       1) Zero out the gradients.
        #       2) Calculate the generator loss, assigning it to gen_loss.
        #       3) Backprop through the generator: update the gradients and optimizer.
        #### START CODE HERE ####
        gen_opt.zero_grad()
        gen_loss = get_gen_loss(gen, disc, criterion, cur_batch_size, z_dim, device)
        gen_loss.backward(retain_graph=True)
        gen_opt.step()
        #### END CODE HERE ####

        # For testing purposes, to check that your code changes the generator weights
        if test_generator:
            try:
                assert lr > 0.0000002 or (gen.gen[0][0].weight.grad.abs().max() < 0.0005 and epoch == 0)
                assert torch.any(gen.gen[0][0].weight.detach().clone() != old_generator_weights)
            except:
                error = True
                print("Runtime tests have failed")

        # Keep track of the average discriminator loss
        mean_discriminator_loss += disc_loss.item() / display_step

        # Keep track of the average generator loss
        mean_generator_loss += gen_loss.item() / display_step

        ### Visualization code ###
        if cur_step % display_step == 0 and cur_step > 0:
            print(f"Epoch {epoch}, step {cur_step}: Generator loss: {mean_generator_loss}, discriminator loss: {mean_discriminator_loss}")
            fake_noise = get_noise(cur_batch_size, z_dim, device=device)
            fake = gen(fake_noise)
            show_tensor_images(fake)
            show_tensor_images(real)
            mean_generator_loss = 0
            mean_discriminator_loss = 0
        cur_step += 1
```
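After training, switch the generator to evaluation mode before drawing a single image from it. In training mode, BatchNorm1d computes statistics over the batch and raises an error for a batch of one. In eval mode it uses the running statistics collected during training. The training loop avoids the problem only because it samples a whole batch at a time. The sketch below uses a hypothetical stand-in network with the same layer types, not the trained `gen`.

```python
import torch
from torch import nn

torch.manual_seed(0)

# Hypothetical stand-in generator with the same layer types as the article's Generator
gen = nn.Sequential(nn.Linear(64, 128), nn.BatchNorm1d(128), nn.ReLU(),
                    nn.Linear(128, 784), nn.Sigmoid())
noise = torch.randn(1, 64)  # a single noise vector

raised = False
try:
    gen(noise)  # training mode: BatchNorm1d needs more than one value per channel
except ValueError as err:
    raised = True
    print("train mode:", err)

gen.eval()             # BatchNorm1d now uses its running mean and variance
with torch.no_grad():  # no gradients are needed just to draw a sample
    image = gen(noise).view(1, 28, 28)
print(image.shape)     # torch.Size([1, 28, 28])
```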

That's all~

References

[1] Generative Adversarial Networks (GANs), DeepLearning.AI.

[2] Ian J. Goodfellow, Jean Pouget-Abadie, Mehdi Mirza, Bing Xu, David Warde-Farley, Sherjil Ozair, Aaron Courville, Yoshua Bengio. Generative Adversarial Nets. NIPS 2014.

[3] https://zhangruochi.com/Your-First-GAN/2020/10/09/